Facebook has recently been criticized for reportedly influencing the election results by failing to filter out news pages containing fake information or spam.

When Facebook tried to regulate these sites, it was criticized again because its moderators would categorize right-wing sites as spam, even though those sites could have been perfectly valid, however undesirable some readers found their content. Mark Zuckerberg maintains that Facebook takes misinformation seriously, as one of the platform’s main objectives is to connect people “with the stories they find most meaningful, and we know people want accurate information.”

How much of what you see on Facebook is ‘true’?

So far, Facebook has relied on its users to flag content they consider fake or misleading, but there is still much to do to solve this issue, which has major philosophical implications for how people get their news on the Internet.

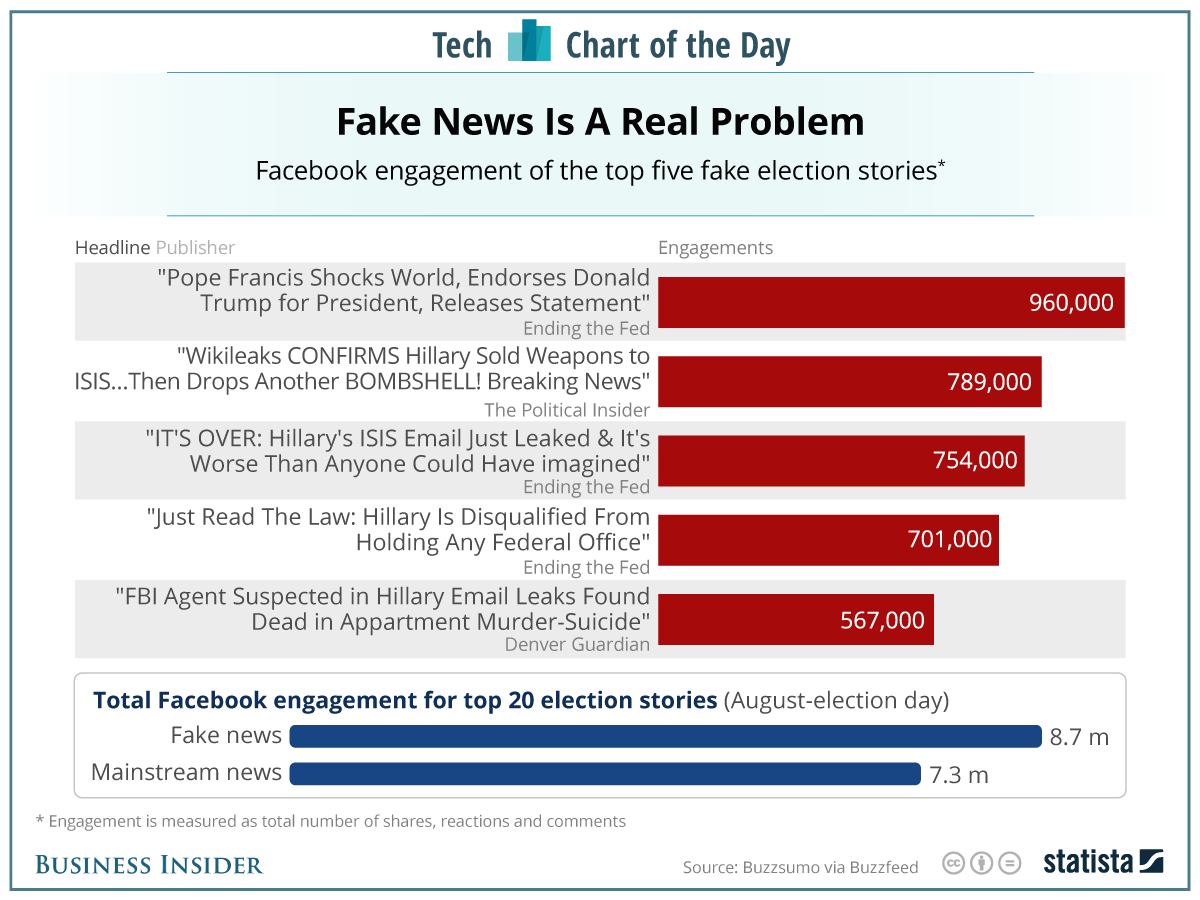

According to the Pew Research Center, 62 percent of American adults get their news on social media, and most of them rely on a single site for doing so. Even though Zuckerberg claims that 99 percent of what people see on Facebook is authentic, it is still possible for fake news to make its way into a user’s News Feed.

As Trump came closer to winning the election, Facebook’s top officials privately discussed how the company had influenced voters’ decisions. In the following days, the company was accused of helping spread misinformation because it had ceased to moderate content thoroughly. It is not yet clear exactly how information degraded to the point that content shared on Facebook could change a person’s voting disposition.

Some argue that Facebook’s algorithm groups users with similar beliefs into bubbles, while others blame the lack of editorial effort in filtering content considered to be misleading or harmful. As for the latter, some of the content that users flag as fake or misleading can still pass through another person’s feed without notice, or even gain a “Share” or a “Like.” It all depends on how the user interacts with the content as it is presented.

A solution is nowhere near being found

Currently, Facebook is seeking advice from fact-checking organizations and journalists to draft a solution that would let the algorithm determine which news pages are truthful, even if their content may be looked down upon by a significant group of users.

The company will improve its reporting mechanisms so users can react promptly to the content they see on their feeds, perhaps even asking them to rate a page based on the fidelity of its content. The algorithm will also be improved to predict what pages users will probably flag as inappropriate.

Facebook must envision a way to gauge an article’s quality, which is expected to occur in the “related articles” section that appears under the links published in a user’s News Feed. The tough part is making the process consistent and optimized, seeing that user moderation is not fully reliable, and neither is editorial moderation coming from Facebook itself.

Because bias will always exist on news sites, it takes a tremendous effort to devise a comprehensive news analysis system that can determine which pages can be of interest to a certain group of people. This process must also consider that among that group, someone could be interested in content that the algorithm rules out of consideration by default.

Going as far as showing people only the content they are interested in is a broader issue than the fake news problem that Facebook is currently facing. As it stands, Facebook is not inclined to forbid specific types of content from appearing on a user’s feed as long as that content is truthful.

“We believe in giving people a voice, which means erring on the side of letting people share what they want whenever possible. We need to be careful not to discourage sharing of opinions or to mistakenly restrict accurate content. We do not want to be arbiters of truth ourselves, but instead, rely on our community and trusted third parties,” wrote Mark Zuckerberg on his Facebook wall.

The idea is that people can and should share whatever they desire, while always keeping in mind that opinion does not necessarily weigh well against the hard truth. Radical websites whose content is just marginally acceptable under Facebook’s terms and conditions are desirable to specific people, and just like everyone else, those people should have the right to share, comment on, and like these pages even if their views are unpleasant to everyone else.

Facebook is becoming a place where ideas and people converge, which leads to new patterns of thought. Some may argue that because Donald Trump won, Americans are looking for a scapegoat to blame. The truth is that, in the current reality, Trump was elected as the next President of the United States with all his flaws and divisive ideas.

Political correctness has reached a point where the people themselves have elected someone who personifies the complete opposite of being correct. It is important to understand that this is not “being wrong” or “being false,” but rather what people have chosen to believe.

Source: Facebook